This research project focuses on developing computer vision methods that build a topological map of an underwater environment from visual information, so that both robots and humans can use the map to navigate.

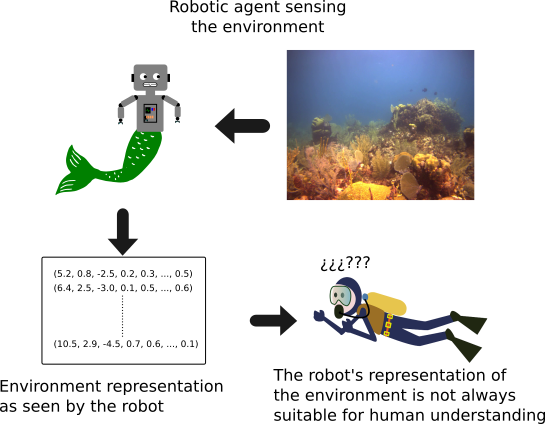

Several methods exist for creating metric maps of an environment [1,2]. A metric map is a representation of the environment in which the spatial relationships between features are preserved. Although these kinds of maps are very desirable for many robotic tasks, they are not always the best option, for example when the environment is dynamic. They are also not always easy for humans to understand.

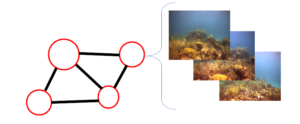

An alternative approach is to represent the environment with a topological map, which models the environment as a graph. The map is composed of places (the nodes of the graph), and the relations between them are captured by the edges. The information stored in the edges does not necessarily have to be metric; it can be any kind of information that establishes a relation between nodes. An advantage of a topological map is that a node can be defined in different ways. For this research project, a node is defined as a collection of images taken from the same place in the environment.
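
As a minimal sketch of this representation (not the project's actual data structure), the graph can be written as a small Python class. The names `PlaceNode`, `TopologicalMap`, the image file names, and the edge label are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PlaceNode:
    """A place in the map: a collection of images taken at the same location."""
    node_id: int
    image_paths: list[str] = field(default_factory=list)


class TopologicalMap:
    """Undirected graph of places; edge labels can hold any kind of relation."""

    def __init__(self) -> None:
        self.nodes: dict[int, PlaceNode] = {}
        self.edges: dict[int, dict[int, str]] = {}

    def add_place(self, image_paths: list[str]) -> int:
        """Create a new node from a set of images and return its id."""
        node_id = len(self.nodes)
        self.nodes[node_id] = PlaceNode(node_id, list(image_paths))
        self.edges[node_id] = {}
        return node_id

    def connect(self, a: int, b: int, relation: str) -> None:
        """Link two places; the relation does not have to be metric."""
        self.edges[a][b] = relation
        self.edges[b][a] = relation

    def neighbors(self, node_id: int) -> list[int]:
        """Return the ids of the places directly connected to node_id."""
        return sorted(self.edges[node_id])


# Hypothetical usage: two places linked by a non-metric relation.
reef_map = TopologicalMap()
start = reef_map.add_place(["frame_000.png", "frame_001.png"])
coral = reef_map.add_place(["frame_040.png"])
reef_map.connect(start, coral, "visited consecutively")
```

Storing the edge label as free-form data reflects the point above: the link between two places only needs to say that they are related, not how far apart they are.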

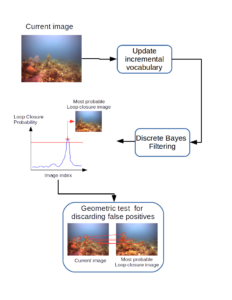

To create the topological map, we have used a Bayes filter [3] combined with an incremental vocabulary of visual features to detect loop-closure events in sequences of underwater images. We have obtained good results in terms of precision and recall.
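
The general idea of a discrete Bayes filter for loop closure can be sketched as follows. This is a generic illustration under stated assumptions, not the method of [3]: the transition probabilities (`stay`, `move`), the detection `threshold`, and the likelihood values are hypothetical. In practice the likelihood would come from comparing the current image against the visual vocabulary.

```python
def predict(belief, stay=0.6, move=0.2):
    """Prediction step: spread belief to neighboring places in the sequence.

    Assumes stay + 2 * move == 1; mass that would leave the ends is kept in place.
    """
    n = len(belief)
    predicted = [0.0] * n
    for i, p in enumerate(belief):
        predicted[i] += stay * p
        for j in (i - 1, i + 1):
            if 0 <= j < n:
                predicted[j] += move * p
            else:
                predicted[i] += move * p  # reflect at the sequence boundaries
    return predicted


def update(predicted, likelihood):
    """Update step: weight each place by the image likelihood and normalize."""
    posterior = [p * l for p, l in zip(predicted, likelihood)]
    total = sum(posterior)
    if total == 0:
        # No place explains the observation; fall back to a uniform belief.
        return [1.0 / len(posterior)] * len(posterior)
    return [p / total for p in posterior]


def detect_loop_closure(belief, threshold=0.7):
    """Return the index of the revisited place, or None if no place is likely enough."""
    best = max(range(len(belief)), key=belief.__getitem__)
    return best if belief[best] >= threshold else None


# Hypothetical usage: four known places, and the current image resembles place 2.
belief = [0.25, 0.25, 0.25, 0.25]
likelihood = [0.1, 0.1, 0.9, 0.1]  # assumed similarity scores, not real data
belief = update(predict(belief), likelihood)
closure = detect_loop_closure(belief)
```

The threshold trades precision against recall: a higher value accepts fewer loop closures but makes false detections less likely.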

Moreover, we have used the topological maps created with our proposed approach [3] to help our underwater robotic platform navigate using only visual information. However, more tests are required to improve our results.

Aside from robot navigation with the created topological maps, we are also interested in developing a suitable interface that lets a human diver use the same topological map to navigate the underwater environment. So far, we have focused on a mobile application that helps a diver navigate an underwater environment previously explored by a robotic agent.

References

[1] Eustice, Ryan M., Oscar Pizarro, and Hanumant Singh. “Visually augmented navigation for autonomous underwater vehicles.” IEEE Journal of Oceanic Engineering 33.2 (2008): 103-122.

[2] Mur-Artal, Raul, J. M. M. Montiel, and Juan D. Tardós. “ORB-SLAM: a versatile and accurate monocular SLAM system.” IEEE Transactions on Robotics 31.5 (2015): 1147-1163.

[3] A. Maldonado-Ramírez and L. A. Torres-Méndez, “A discrete Bayes filter for visual loop-closing in image sequences of coral reef explorations taken by humans and AUVs,” OCEANS 2017: 1-5.
