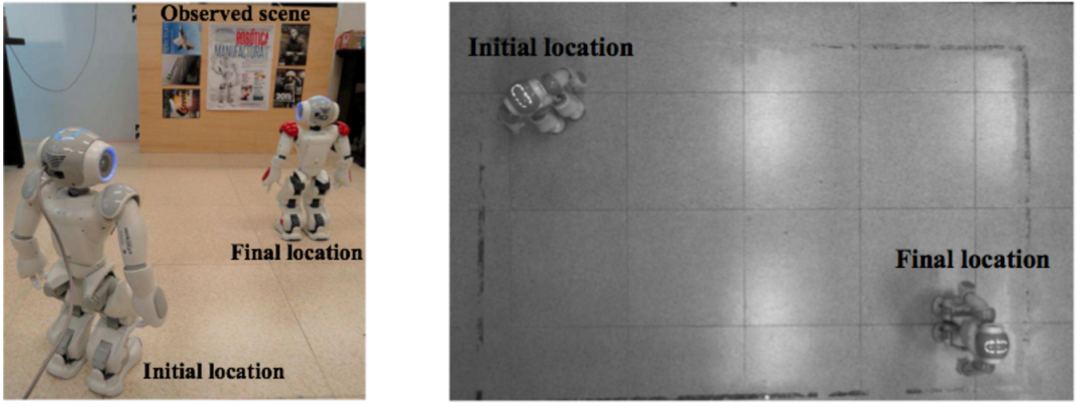

Abstract: In this paper, we address the problem of humanoid locomotion guided by information from a monocular camera. The goal of the robot is to reach a desired location defined in terms of a target image, i.e., a positioning task. The proposed approach allows us to specify a desired time to complete the positioning task, which is an advantage over classical exponential convergence. In particular, finite-time convergence is achieved while generating smooth robot velocities and taking into account the omnidirectional walking capability of the robot. In addition, we propose a hierarchical task-based control scheme that can simultaneously handle the visual positioning task and an obstacle avoidance task without affecting the desired time of convergence. The controller is able to activate or deactivate the obstacle avoidance task without generating discontinuous velocity references while the humanoid is walking. Stability of the closed loop under the two-task control scheme is demonstrated theoretically, even during transitions between tasks. The proposed approach is generic in the sense that different visual control schemes are supported. We evaluate homography-based visual servoing in position-based and image-based modalities, as well as in eye-in-hand and eye-to-hand configurations. The experimental evaluation is performed with the humanoid robot NAO.

Visual Servo Walking Control for Humanoids with Finite-time Convergence and Smooth Robot Velocities

Authors: Josafat Delfín, Héctor Becerra and Gustavo Arechavaleta
