For underwater robotics applications that involve monitoring and inspection tasks, it is important to capture high-quality color images in real time. In recent years, a large body of research has focused on the color correction or color restoration of underwater images, which differs from the color enhancement problem. The former attempts to recover the “true” color of the scene, while the latter tries to enhance the color and other cues without necessarily recovering the original tones. Most of the work reported in the literature on the color restoration problem relies on approaches that take the image formation process as their baseline. However, many of the variables involved in this process change constantly, which makes it hard to model, so some assumptions have to be made. Other approaches apply image processing techniques directly to the pixel values of the image. Few works use statistical approaches that learn the correlations between a set of color-depleted and color image patches in order to recover the color in new images. Furthermore, the use of suitable color space models has proven to be crucial in the color restoration process.

We have proposed a statistical learning method with automatic selection of the training set for restoring the color of underwater color-degraded video sequences. Our method uses a Markov Random Field (MRF) model, which, like any statistical learning method, depends strongly on its training set. In our case, to estimate the missing color in a video sequence, the training set must capture, throughout the whole video sequence, the correlations between a color-degraded image and its corresponding color image. Since we could not directly obtain true-color training patches from the underwater scenes (i.e., either the scenes had already been recorded or we were not allowed to use a light source at any time for habitat protection reasons), we first recover the color by applying a multiple color space analysis and processing stage to a previously selected frame of the video sequence, which is then used as the seed (training set) for our MRF-BP model. The training is adaptive in the sense that a new frame is selected whenever the distribution of the channel values changes with respect to previous distributions.
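The adaptive frame selection described above can be illustrated with a minimal sketch. It is not the authors' implementation: the histogram binning, the distance measure (half the L1 distance per channel), the threshold value, the comparison against the last selected frame only, and all function names are assumptions made for illustration. Frames are represented as flat sequences of 8-bit (R, G, B) pixels.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Frame = Sequence[Pixel]  # a frame flattened to a sequence of 8-bit (R, G, B) pixels


def channel_histograms(frame: Frame, bins: int = 16) -> List[List[float]]:
    """Return one normalized histogram per color channel."""
    hists = [[0.0] * bins for _ in range(3)]
    for pixel in frame:
        for c in range(3):
            hists[c][pixel[c] * bins // 256] += 1.0
    n = max(len(frame), 1)  # avoid division by zero on an empty frame
    return [[count / n for count in h] for h in hists]


def histogram_distance(a: List[List[float]], b: List[List[float]]) -> float:
    """Mean over channels of half the L1 distance; the result lies in [0, 1]."""
    per_channel = [
        0.5 * sum(abs(x - y) for x, y in zip(ha, hb)) for ha, hb in zip(a, b)
    ]
    return sum(per_channel) / len(per_channel)


def select_training_frames(
    frames: Sequence[Frame], threshold: float = 0.25, bins: int = 16
) -> List[int]:
    """Return indices of frames at which a new training seed would be chosen.

    Assumption: a frame is selected when its channel distributions differ from
    those of the last selected frame by more than `threshold` (hypothetical value).
    """
    selected: List[int] = []
    reference = None
    for i, frame in enumerate(frames):
        hists = channel_histograms(frame, bins)
        if reference is None or histogram_distance(reference, hists) > threshold:
            selected.append(i)
            reference = hists  # later frames are compared against this seed
    return selected
```

In this sketch, each selected frame would then go through the color recovery stage and serve as the training seed until the next distribution change is detected.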

Experimental results on real underwater video sequences demonstrate that our approach is feasible, even when visibility conditions are poor: our method can recover and discriminate between different colors in objects that may seem similar to the human eye.

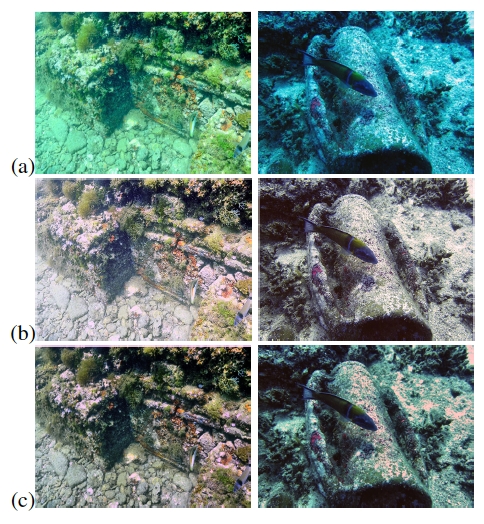

Comparison between our AWW method and the WW assumption: (a) underwater images; (b) output using the WW assumption (taken from [1]); (c) output using our adapted WW assumption.

References

[1] G. Bianco, M. Muzzupappa, F. Bruno, R. Garcia, and L. Neumann. “A new color correction method for underwater imaging.” The International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, 40(5):25, 2015.
