We have developed a behavioral approach for autonomous robotic exploration of marine habitats with collision avoidance. In particular, we are interested in the exploration and continuous monitoring of coral reefs in order to diagnose disease or physical damage while avoiding collisions with fragile marine life and structures. An autonomous underwater vehicle (AUV) must decide on the best route in real time while avoiding collisions. To achieve this, we have opted for a passive sensor, i.e., a camera, using only visual information as input.

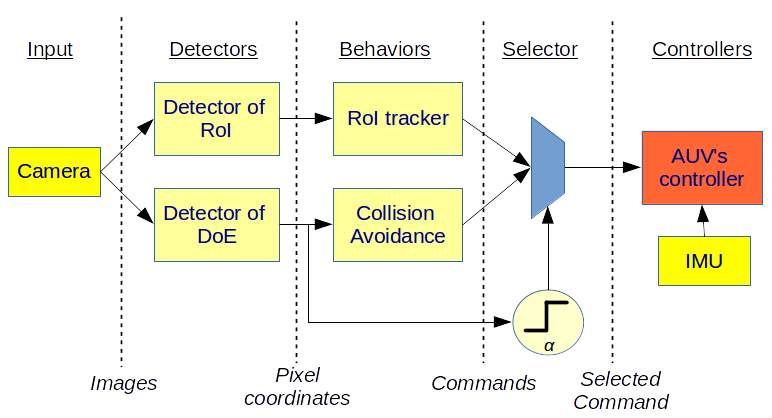

Our exploration architecture consists of two behaviors: a tracker of regions of interest and a collision avoidance behavior. During exploration, the robot's navigation system is guided towards the currently most relevant region while continuously checking for free space (water) in which to navigate. The proposed framework successfully combines both behaviors despite their opposing nature (moving towards a region to explore it while avoiding collisions).

Overview of the proposed approach. The images from the camera are used to detect regions of interest and free space to navigate. That information is processed to define navigation actions. Taken from [1].
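The text does not specify how the two behaviors are fused. A minimal sketch, assuming a priority-based (subsumption-style) arbitration in which collision avoidance, whenever it issues a request, overrides the RoI tracker. The function name `arbitrate` and the normalized yaw convention in [-1, 1] are hypothetical, not the authors' implementation:

```python
from typing import Optional


def arbitrate(avoid_yaw: Optional[float], track_yaw: Optional[float]) -> float:
    """Hypothetical priority-based fusion of the two behaviors.

    avoid_yaw: yaw request from collision avoidance, or None when the way ahead is clear.
    track_yaw: yaw request from the RoI tracker, or None when no RoI is being tracked.
    Both are normalized yaw rates in [-1, 1] (assumed convention).
    """
    if avoid_yaw is not None:
        return avoid_yaw  # avoiding a collision always takes precedence
    if track_yaw is not None:
        return track_yaw  # otherwise steer towards the most relevant region
    return 0.0  # neither behavior is active: keep the current heading


# The tracker wants to turn left, but an obstacle forces a right turn.
print(arbitrate(0.5, -0.3))   # 0.5
print(arbitrate(None, -0.3))  # -0.3
```

Under this assumption, the tracker drives the robot whenever the way ahead is clear, and the free-space direction takes over only when an obstacle appears.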

The Detector of Regions of Interest (RoI) uses a computational visual attention algorithm [2] to find the most relevant feature in terms of color. To track an RoI, we need a descriptor that encompasses both its color and its position. We have found that using superpixels as descriptors enables robust and fast tracking of RoIs. Superpixel segmentation is performed with the SLIC algorithm [3].
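A minimal sketch of a superpixel descriptor and a nearest-descriptor tracker, assuming the descriptor is the superpixel's mean RGB color plus its centroid, and that the RoI is re-acquired in the next frame as the superpixel with the lowest weighted color-and-position distance. The label map could come from any SLIC implementation (e.g. scikit-image's `skimage.segmentation.slic`); the helper names `superpixel_descriptors` and `track_roi` and the weights are hypothetical:

```python
from typing import Dict, List, Tuple

RGB = Tuple[float, float, float]
# Descriptor = (mean R, mean G, mean B, centroid x, centroid y)
Descriptor = Tuple[float, float, float, float, float]


def superpixel_descriptors(image: List[List[RGB]],
                           labels: List[List[int]]) -> Dict[int, Descriptor]:
    """Mean color and centroid of every superpixel in a label map (e.g. SLIC output)."""
    sums: Dict[int, List[float]] = {}
    for y, row in enumerate(labels):
        for x, label in enumerate(row):
            r, g, b = image[y][x]
            s = sums.setdefault(label, [0.0] * 6)  # R, G, B, x, y, pixel count
            s[0] += r
            s[1] += g
            s[2] += b
            s[3] += x
            s[4] += y
            s[5] += 1
    return {k: (s[0] / s[5], s[1] / s[5], s[2] / s[5], s[3] / s[5], s[4] / s[5])
            for k, s in sums.items()}


def track_roi(target: Descriptor, candidates: Dict[int, Descriptor],
              color_weight: float = 1.0, position_weight: float = 0.5) -> int:
    """Label of the candidate superpixel closest to the target descriptor."""
    def cost(d: Descriptor) -> float:
        color = sum((a - b) ** 2 for a, b in zip(target[:3], d[:3]))
        position = (target[3] - d[3]) ** 2 + (target[4] - d[4]) ** 2
        return color_weight * color + position_weight * position
    return min(candidates, key=lambda k: cost(candidates[k]))


# Toy 2x4 frame: a red superpixel (label 0) on the left, a blue one (label 1) on the right.
red, blue = (255, 0, 0), (0, 0, 255)
image = [[red, red, blue, blue], [red, red, blue, blue]]
labels = [[0, 0, 1, 1], [0, 0, 1, 1]]
descriptors = superpixel_descriptors(image, labels)
print(descriptors[0])  # (255.0, 0.0, 0.0, 0.5, 0.5)
print(track_roi((250.0, 5.0, 5.0, 1.0, 0.5), descriptors))  # 0
```

Working with a few hundred superpixels instead of every pixel keeps the per-frame matching cheap, which is one plausible reason superpixel descriptors allow fast tracking.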

The detector of the Direction of Escape (DoE) determines the free-space regions in the image. In underwater environments, water is considered free space; thus, any object or region not classified as water is treated as an obstacle. To find the free space, the image is first segmented into superpixels. Then, during a training phase, the algorithm learns the features of “water” superpixels (width, height, average color and area). Once training is complete, the collision avoidance algorithm classifies the superpixels of subsequent images as water or non-water. The centroid of the regions labeled as water, if there is at least one, is then computed and taken as the Direction of Escape (DoE). We have previously used this approach for indoor mobile robot navigation with promising results [4].
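The text does not name the classifier used to separate water from non-water superpixels. A minimal sketch, assuming a simple statistical model: the per-feature mean and standard deviation are learned from training water superpixels, and at run time a superpixel counts as water if its normalized (RMS z-score) distance to that model is small. The DoE is then the area-weighted mean of the water superpixels' centroids, which equals the centroid of all water pixels. The feature order, function names and `max_dist` are hypothetical:

```python
import math
from typing import List, Optional, Sequence, Tuple

# Per-superpixel features, in the order given in the text:
# (width, height, mean R, mean G, mean B, area)
Features = Sequence[float]
Model = Tuple[List[float], List[float]]  # per-feature means and standard deviations


def train_water_model(samples: List[Features]) -> Model:
    """Learn per-feature mean and standard deviation from training water superpixels."""
    n, dims = len(samples), len(samples[0])
    means = [sum(s[i] for s in samples) / n for i in range(dims)]
    # A zero spread would make the z-score undefined, so fall back to 1.0.
    stds = [math.sqrt(sum((s[i] - means[i]) ** 2 for s in samples) / n) or 1.0
            for i in range(dims)]
    return means, stds


def is_water(features: Features, model: Model, max_dist: float = 3.0) -> bool:
    """Classify a superpixel as water if its RMS z-score distance is small enough."""
    means, stds = model
    z2 = [((x - m) / s) ** 2 for x, m, s in zip(features, means, stds)]
    return math.sqrt(sum(z2) / len(z2)) <= max_dist


def direction_of_escape(superpixels: List[Tuple[Features, Tuple[float, float]]],
                        model: Model) -> Optional[Tuple[float, float]]:
    """Centroid of all water superpixels, weighted by area; None if no water is found."""
    total = sx = sy = 0.0
    for features, (cx, cy) in superpixels:
        if is_water(features, model):
            area = features[5]
            total += area
            sx += area * cx
            sy += area * cy
    if total == 0.0:
        return None
    return sx / total, sy / total


water_samples = [(10, 10, 0, 100, 200, 100), (12, 10, 0, 110, 190, 110),
                 (10, 12, 10, 100, 200, 105)]
model = train_water_model(water_samples)
frame = [((11, 11, 5, 105, 195, 100), (10.0, 20.0)),    # water-like
         ((11, 11, 5, 105, 195, 100), (30.0, 20.0)),    # water-like
         ((10, 10, 200, 50, 50, 100), (100.0, 100.0))]  # reddish: obstacle
print(direction_of_escape(frame, model))  # (20.0, 20.0)
```

Returning `None` when no superpixel is classified as water mirrors the condition in the text that a centroid exists only if at least one water region is found.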

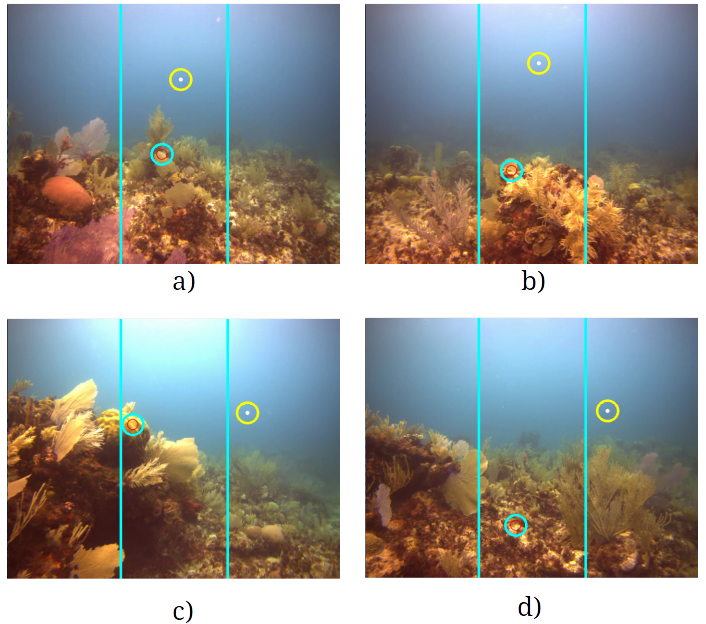

Examples of the location of the RoI (cyan circle) and the DoE (yellow circle) in a real exploration test. The free-space centroid tends to stay near the center of the image when no obstacle is in front of the camera (a, b). When the DoE crosses a threshold (cyan lines), a yaw command is generated to steer the robot towards the free space. Taken from [1].
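The caption describes a central band bounded by two threshold lines, outside of which a yaw command is issued. A minimal sketch of such a rule, assuming thresholds placed symmetrically around the image center and a yaw rate that grows linearly with how far the DoE lies beyond them. The function name `yaw_command`, the parameters `band` and `max_yaw`, the sign convention (positive = turn right) and the fallback when no water is visible are all assumptions:

```python
import math
from typing import Optional, Tuple


def yaw_command(doe: Optional[Tuple[float, float]], image_width: int,
                band: float = 0.2, max_yaw: float = 1.0) -> float:
    """Hypothetical reactive yaw rule driven by the horizontal position of the DoE.

    band: half-width of the central dead zone as a fraction of half the image width;
          its edges play the role of the two threshold lines.
    Returns a normalized yaw rate (positive = turn right, an assumed convention).
    """
    if doe is None:
        return max_yaw  # assumption: no water visible, so turn in place to search
    half = image_width / 2.0
    offset = (doe[0] - half) / half  # -1 at the left edge, +1 at the right edge
    if abs(offset) <= band:
        return 0.0  # DoE between the threshold lines: no correction needed
    # Scale linearly from 0 at the threshold to max_yaw at the image border.
    excess = (abs(offset) - band) / (1.0 - band)
    return math.copysign(min(excess, 1.0) * max_yaw, offset)


print(yaw_command((330.0, 240.0), 640))  # 0.0
print(yaw_command((640.0, 240.0), 640))  # 1.0
```

The dead zone keeps the robot from reacting to small fluctuations of the free-space centroid, which, as the figure shows, tends to stay near the center when nothing blocks the way.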

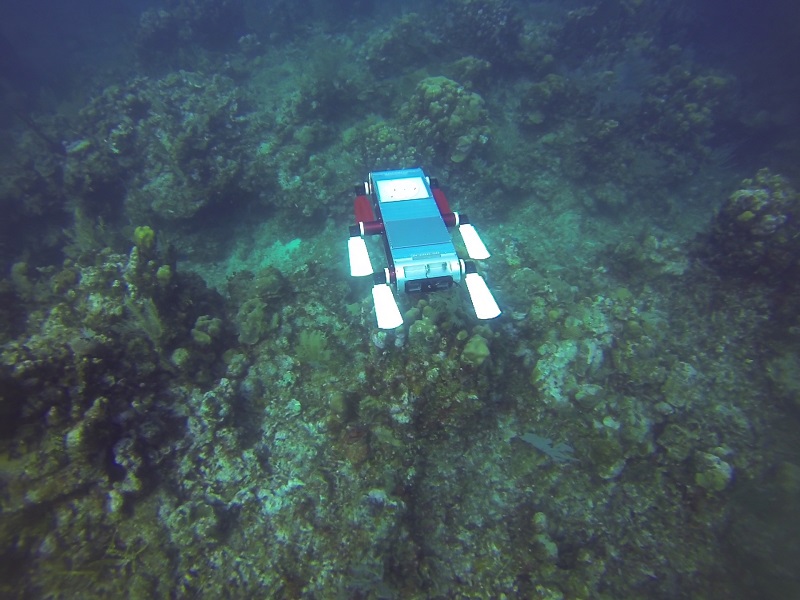

We have implemented our approach on an environmentally friendly robot that uses fins instead of propellers, allowing non-invasive and cautious exploration. Results of sea trials performed at different locations and depths demonstrate the feasibility of our approach.

References

[1] A. Maldonado-Ramírez, L. A. Torres-Méndez, and F. Rodríguez-Telles, “Ethologically inspired reactive exploration of coral reefs with collision avoidance: Bridging the gap between human and robot spatial understanding of unstructured environments,” in Proc. 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 2015, pp. 4872-4879.

[2] A. Maldonado-Ramírez and L. A. Torres-Méndez, “Robotic Visual Tracking of Relevant Cues in Underwater Environments with Poor Visibility Conditions,” Journal of Sensors, vol. 2016, Article ID 4265042, 16 pages, 2016.

[3] R. Achanta et al., “SLIC superpixels compared to state-of-the-art superpixel methods,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 34, no. 11, pp. 2274-2282, 2012.

[4] F. G. Rodríguez-Telles et al., “Vision-based reactive autonomous navigation with obstacle avoidance: Towards a non-invasive and cautious exploration of marine habitat,” in Proc. 2014 IEEE International Conference on Robotics and Automation (ICRA), 2014.
